Docker

Life without Docker

- Docker provides abstraction, and abstraction allows us to work with complicated things in simplified terms.

- If you run a big infrastructure, you probably don't want to deploy hundreds of virtual machines for your hundreds of applications.

- Docker and containers are a bit like app stores for mobile devices: installation, management and removal have all become very easy.

- Docker provides abstraction for compatibility: containers share the host's kernel, so an image runs the same way on any host with a compatible kernel.

- Isolation is easier and lighter-weight to achieve than with VMware virtual machines.

- Cost effective

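- Security: each container is isolated from other containers and from the host (though, unlike a VMware virtual machine, containers share the host kernel).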

What is Docker

"Build, Ship, and Run Any App, Anywhere"

An open-source project that automates the deployment of software applications inside containers by providing an additional layer of abstraction and automation of OS-level virtualization on Linux.

In simple terms, Docker is a tool that allows developers, sysadmins, etc. to deploy applications in a sandbox (which in the Docker world we call a container) that runs on top of the host operating system.

OR

Docker makes running our app inside containers really easy, where containers can be thought of as fast, lightweight virtual machines (though, unlike VMs, they share the host's kernel).

OR

A layman's definition: Docker is a platform that packages a service into a standardized unit (an image), and everything needed to make that service run is included in that unit.

Editions -

Community Edition (CE):-

- Open Source

- Lots of Contributors

- Quick release cycle

Enterprise Edition (EE):-

- Slower release cycle

- Additional features

- Official support

Before learning more about Docker, let's talk a little about containers, images and Dockerfiles:

Container - A container is a running instance of an image.

Image - An image is a unit that contains everything (the code, libraries, environment variables and configuration files) that our service requires to run.

Dockerfile - Think of it as a blueprint for creating Docker images. It can build on top of another image (the base image named in its FROM line), and it defines what software to install and what commands to run.

Platform Availability

* Linux

* Mac

* Windows

* Windows Server 2016

Docker on Linux

There are various ways to install Docker; two of them are described below.

Follow the official installation page on docker.com for your distribution (linked below).

- Ubuntu

https://docs.docker.com/install/linux/docker-ce/ubuntu/

or install it manually:

$ sudo apt-get update

$ sudo apt install apt-transport-https ca-certificates curl software-properties-common

$ curl -fsSL https://download.docker.com/linux/ubuntu/gpg | sudo apt-key add -

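$ sudo add-apt-repository "deb [arch=amd64] https://download.docker.com/linux/ubuntu $(lsb_release -cs) stable"

($(lsb_release -cs) expands to your Ubuntu release's codename, for example bionic on 18.04, so the line is not tied to a single release.)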

$ sudo apt update

$ apt-cache policy docker-ce

$ sudo apt install docker-ce

- Debian

https://docs.docker.com/install/linux/docker-ce/debian/

- One command install

$ wget -qO- https://get.docker.com/ | sh

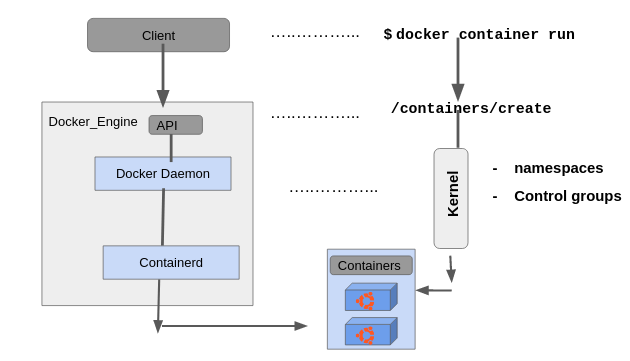
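To confirm the installation from a script rather than by eye, the version string printed by docker --version can be parsed. The following is a minimal Python sketch; the helper names are hypothetical, and the regular expression assumes the usual "Docker version X.Y.Z, build ..." output format.

```python
from __future__ import annotations

import re
import shutil
import subprocess

# Matches e.g. "Docker version 24.0.5, build ced0996"; suffixes such as the
# "-ce" used by older releases ("18.06.1-ce") are simply ignored.
_VERSION_RE = re.compile(r"Docker version (\d+)\.(\d+)\.(\d+)")


def parse_docker_version(text: str) -> tuple[int, int, int]:
    """Extract (major, minor, patch) from `docker --version` output."""
    match = _VERSION_RE.search(text)
    if match is None:
        raise ValueError(f"unrecognised version string: {text!r}")
    major, minor, patch = (int(part) for part in match.groups())
    return major, minor, patch


def installed_docker_version() -> tuple[int, int, int] | None:
    """Return the installed client version, or None if `docker` is not on PATH.

    `docker --version` only checks the client binary; it does not prove
    that the daemon is running (`docker version` would contact it).
    """
    if shutil.which("docker") is None:
        return None
    result = subprocess.run(
        ["docker", "--version"], capture_output=True, text=True, check=True
    )
    return parse_docker_version(result.stdout)
```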

Architecture

Docker uses a client-server architecture. The Docker client talks to the Docker daemon, which does the heavy lifting of building, running, and distributing your Docker containers. The Docker client and daemon can run on the same system, or you can connect a Docker client to a remote Docker daemon. The Docker client and daemon communicate using a REST API, over UNIX sockets or a network interface.
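To make the client-server split concrete: the docker CLI is an HTTP client for the daemon's REST API, which on Linux usually listens on the UNIX socket /var/run/docker.sock (the equivalent of curl --unix-socket /var/run/docker.sock http://localhost/version). The Python sketch below sends the same kind of request using only the standard library. The socket path is an assumption (the daemon can be configured to listen elsewhere), the helper names are hypothetical, and the calling user needs permission on the socket (root, or membership in the docker group).

```python
import http.client
import json
import socket

# Assumed default daemon socket on Linux installs.
DOCKER_SOCKET = "/var/run/docker.sock"


class UnixHTTPConnection(http.client.HTTPConnection):
    """An HTTPConnection that talks to a UNIX domain socket instead of TCP."""

    def __init__(self, socket_path: str, timeout: float = 5.0):
        # "localhost" only ends up in the Host header; no TCP connection is made.
        super().__init__("localhost", timeout=timeout)
        self.socket_path = socket_path

    def connect(self):
        sock = socket.socket(socket.AF_UNIX, socket.SOCK_STREAM)
        sock.settimeout(self.timeout)
        try:
            sock.connect(self.socket_path)
        except OSError:
            sock.close()
            raise
        self.sock = sock


def docker_api_get(path: str, socket_path: str = DOCKER_SOCKET):
    """Send `GET path` to the Engine API over the socket and decode the JSON reply."""
    conn = UnixHTTPConnection(socket_path)
    try:
        conn.request("GET", path)
        resp = conn.getresponse()
        body = resp.read()
        if resp.status != 200:
            raise RuntimeError(f"GET {path} returned HTTP {resp.status}: {body[:200]!r}")
        return json.loads(body)
    finally:
        conn.close()
```

For example, docker_api_get("/version")["Version"] would return the daemon's version, and docker_api_get("/containers/json") returns roughly what docker container ls shows.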


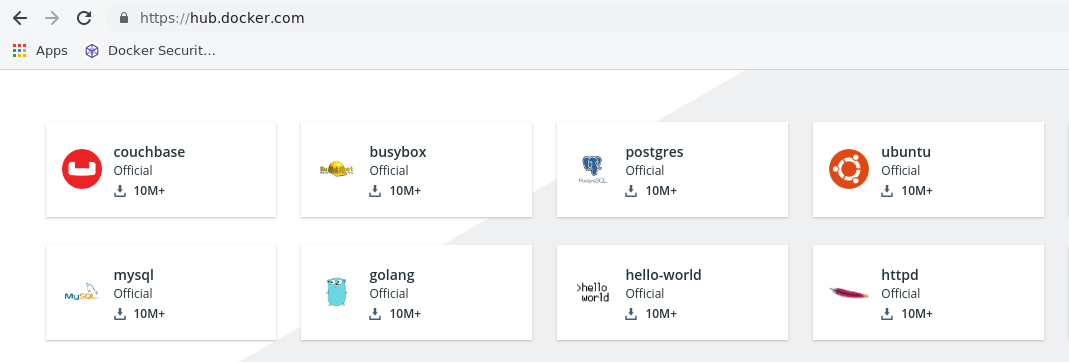

Docker Registry is a hub from which you can download (pull) images: public, private, official, and images published by people like us. Docker Hub is the default public registry.

Docker in DevOps

We keep hearing about DevOps these days, and some people mistakenly think Docker is DevOps. So I thought, why not explain the Docker commands in terms of Dev and Ops? It might help you understand the difference between DevOps and Docker.

So I'll divide the commands into two categories, Dev and Ops, starting with the Ops work.

Ops based Docker Commands:

Ops: here we will perform operations such as downloading an image (pulling it from a registry), starting a new container, logging in to the new container, running commands inside it and destroying containers.

Back to the topic:

I have a fresh installation of Docker on my Ubuntu EC2 instance. So let's see a demo of what we learned about the Docker architecture in theory.

To start, you can view Docker's built-in help by typing

$ docker --help

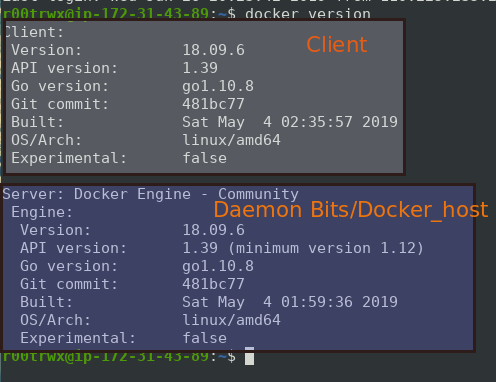

Docker has two major components:

* Client

* Daemon (engine, host, server)

Now, to see the versions of both the client and the daemon (server) on our system, type:

$ docker version

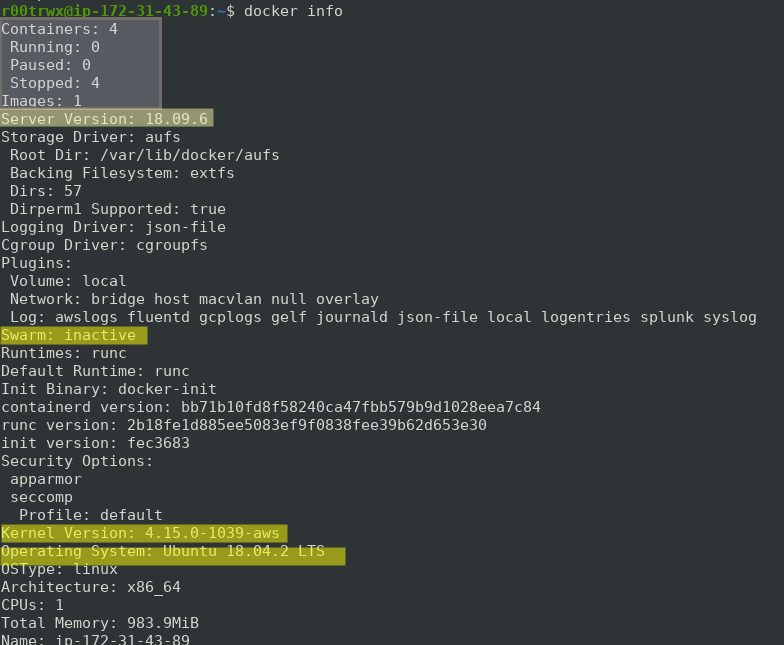

Another command to get more information about Docker is

$ docker info

In the output below, look at the top: it also shows how many images there are and the number of containers, broken down into running, paused and stopped.

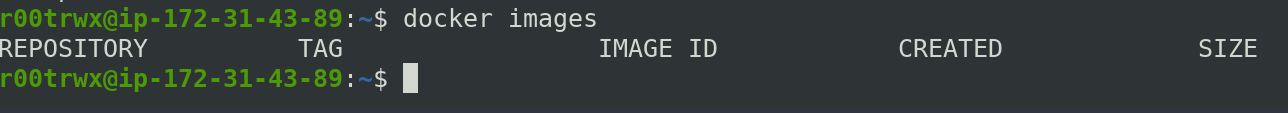
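The same counts can be read programmatically. This sketch assumes docker info accepts a Go template via --format (here {{json .}}, which prints the whole info object as JSON) and reads its Images, Containers, ContainersRunning, ContainersPaused and ContainersStopped fields. The helper names are hypothetical, and the runner parameter exists only so the function can be tested without a daemon.

```python
from __future__ import annotations

import json
import subprocess


def read_docker_info(runner=subprocess.run) -> dict:
    """Run `docker info --format '{{json .}}'` and decode the JSON it prints."""
    result = runner(
        ["docker", "info", "--format", "{{json .}}"],
        capture_output=True, text=True, check=True,
    )
    return json.loads(result.stdout)


def summarize(info: dict) -> str:
    """Format the image and container counts shown at the top of `docker info`."""
    return (
        f"Images: {info.get('Images', 0)} | "
        f"Containers: {info.get('Containers', 0)} "
        f"(running {info.get('ContainersRunning', 0)}, "
        f"paused {info.get('ContainersPaused', 0)}, "
        f"stopped {info.get('ContainersStopped', 0)})"
    )
```

On a host with the daemon running, print(summarize(read_docker_info())) prints a one-line summary.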

Another way to see the images is the docker images command.

Let's follow the architecture explained above and start with the client command docker run <image>.

But first you must know which image you want to run. In my case, we will run the ubuntu image, as shown in the architecture above.

$ docker image ls

or

$ docker images

Here you will see that we don't have any images on our Docker host. Now suppose we want to run the ubuntu image, but there are no images in the local Docker repository.

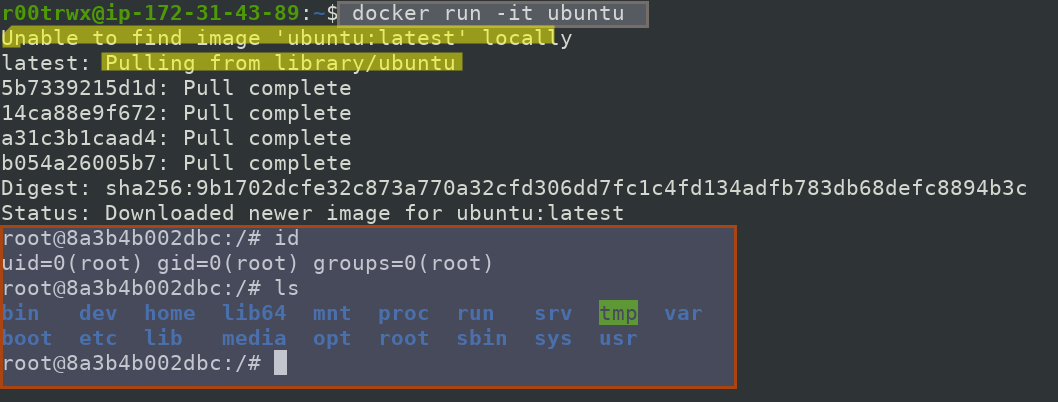

So if you remember the architecture slides, the second one did not have any ubuntu image on the Docker host. So this time Docker will fetch it from the Docker Hub registry.

It first pulls the image from the registry and then saves it to the Docker host's local image repository.

There are two ways to do that: run the image directly, in which case Docker pulls it and then runs it, or pull it first with docker pull ubuntu and run it later.
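The "pull first, run later" option can be scripted as "pull only if missing". A minimal sketch, assuming that docker image inspect exits with a non-zero status when the image is not in the local repository; the helper names are hypothetical, and runner is injectable so the logic can be tested without Docker.

```python
import subprocess


def image_is_local(image: str, runner=subprocess.run) -> bool:
    """`docker image inspect` exits non-zero when the image is not stored locally."""
    result = runner(["docker", "image", "inspect", image],
                    capture_output=True, text=True)
    return result.returncode == 0


def ensure_image(image: str, runner=subprocess.run) -> bool:
    """Pull `image` only if it is missing locally; return True if a pull happened."""
    if image_is_local(image, runner):
        return False
    runner(["docker", "pull", image], check=True)
    return True
```

docker run performs an equivalent check on its own, so a helper like this is mainly useful for pre-pulling images, for example before going offline.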

$ docker run -it ubuntu

Here,

-it : -i (interactive) keeps the container's STDIN open so we can type into it, and -t allocates a pseudo-terminal (TTY); together they give us an interactive shell inside the container.

In the output, notice that the Docker client first cannot find the ubuntu image in the local image repository, so it pulls the image from the Docker Hub library, as explained in the architecture above, and finally gives us an interactive bash shell.

If you notice, the prompt has changed to root. Now we are inside our ubuntu container.

Now suppose you want to get out of the ubuntu shell but keep the container running in the background.

Press Ctrl+P followed by Ctrl+Q. This detaches you from the container without stopping it.

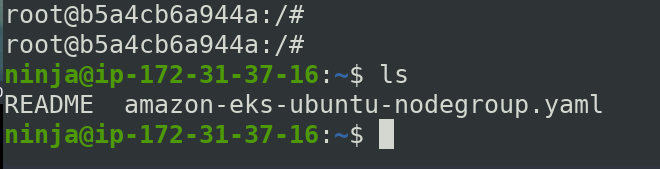

Note: the container ID and user have changed here because I wrote this GitBook in two parts.

Now, if you want to jump back into the ubuntu container, run the command below with its container ID. You can find the container ID with:

$ docker container ls
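If you need the container ID in a script, docker container ls can print one JSON object per container via --format '{{json .}}'. The sketch below parses that output and picks out the IDs of containers created from a given image; the helper names are hypothetical, and runner is injectable for testing.

```python
from __future__ import annotations

import json
import subprocess


def list_containers(runner=subprocess.run) -> list[dict]:
    """Return one dict per running container (keys such as ID, Image, Names, Status)."""
    result = runner(
        ["docker", "container", "ls", "--format", "{{json .}}"],
        capture_output=True, text=True, check=True,
    )
    # Each non-empty output line is a separate JSON object.
    return [json.loads(line) for line in result.stdout.splitlines() if line.strip()]


def ids_for_image(containers: list[dict], image: str) -> list[str]:
    """IDs of the listed containers that were started from `image`."""
    return [c["ID"] for c in containers if c.get("Image") == image]
```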

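Now, to get back into the container:

$ docker exec -it b5a4cb6a944a bash

docker exec starts a new bash process inside the running container. Alternatively, docker attach b5a4cb6a944a reattaches your terminal to the original shell.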

Now let's exit from the container and learn how to stop and remove the container and delete the image.

To stop the container

$ docker container stop b5a4cb6a944a

To remove the container

$ docker container rm b5a4cb6a944a

To remove the image from the local image repository (docker rmi takes an image name or image ID, not a container ID)

$ docker rmi ubuntu
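The order of the three commands above matters, because Docker will not remove an image that a container (even a stopped one) still uses unless it is forced with -f. A small sketch that keeps that order; the helper names are hypothetical.

```python
from __future__ import annotations

import subprocess


def cleanup_commands(container_id: str, image: str) -> list[list[str]]:
    """Stop and remove the container first, then delete the image it used."""
    return [
        ["docker", "container", "stop", container_id],
        ["docker", "container", "rm", container_id],
        ["docker", "rmi", image],
    ]


def run_all(commands: list[list[str]], runner=subprocess.run) -> None:
    """Run each command in turn; check=True raises on the first failure."""
    for command in commands:
        runner(command, check=True)
```

For the container above, run_all(cleanup_commands("b5a4cb6a944a", "ubuntu")) would replay the three steps.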

Dev based Docker Commands:

Now we are developers. The developer's task is to develop the app, put it under version control and containerize it using Docker, which means writing a Dockerfile, etc.

So let's assume that our application is already built. It's time to use git.

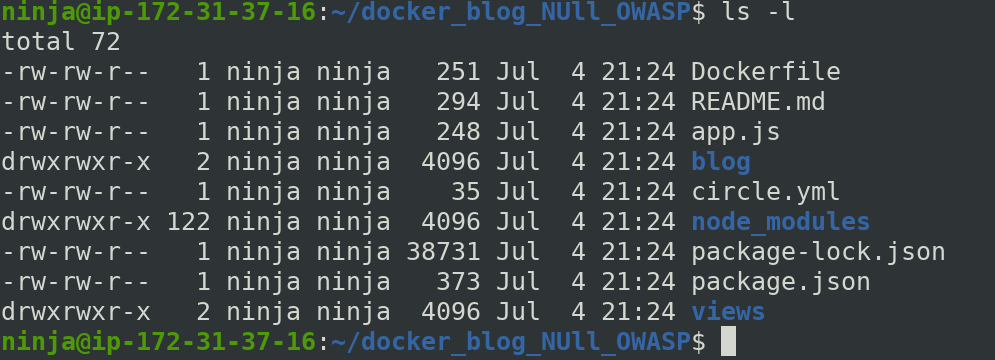

$ git clone https://github.com/r00trwx/docker_blog_NUll_OWASP.git

So we have cloned our app code. Navigate to the docker_blog_NUll_OWASP directory and run ls -l to see the cloned files.

$ cd docker_blog_NUll_OWASP

$ ls -l

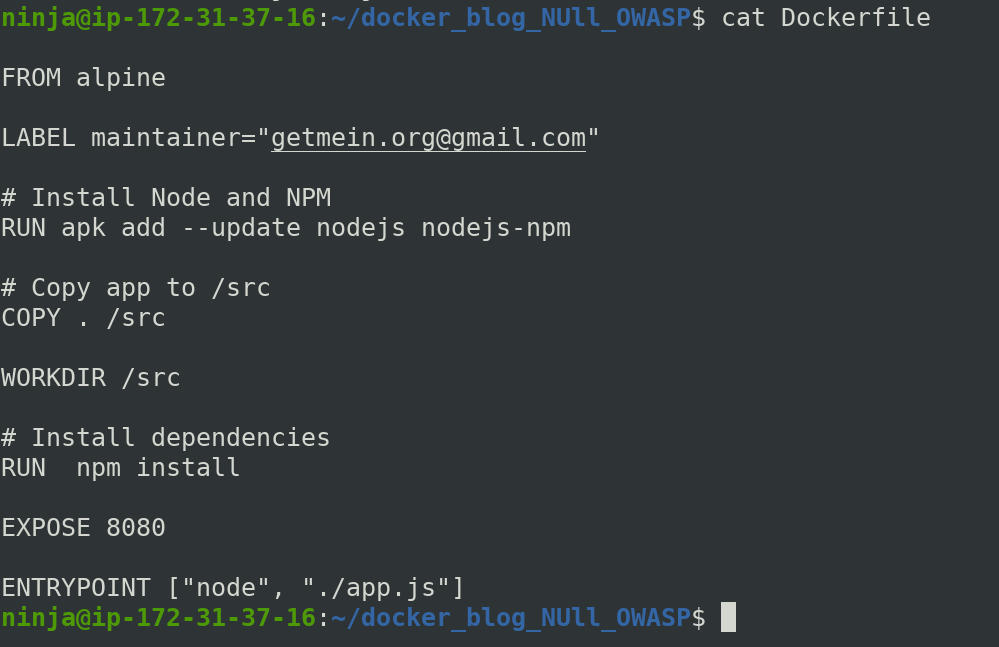

It has a Dockerfile; let's see its contents:

$ cat Dockerfile

So remember, as I mentioned, a Dockerfile is a blueprint for creating Docker images. Here is our blueprint. It contains instructions such as which software to install, for example:

# Install Node and NPM

RUN apk add --update nodejs nodejs-npm

We can also list all the dependencies we need to install:

# Install dependencies

RUN npm install

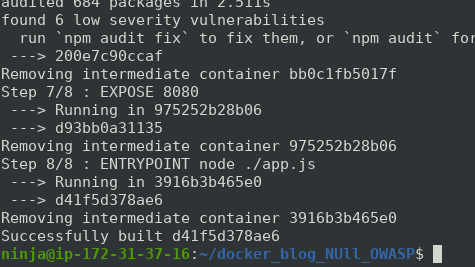

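Finally, let's build our image. Here -t blog:latest names the image blog with the tag latest, and the trailing . tells Docker to use the current directory (where the Dockerfile is) as the build context: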

$ docker image build -t blog:latest .

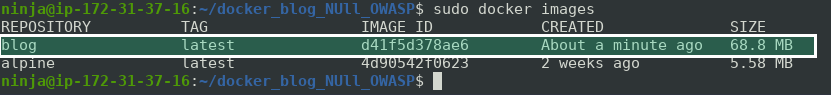
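Instead of copying the image ID from the build output, a script can build and tag the image and then look up its ID by tag. A minimal sketch, assuming docker image inspect --format '{{.Id}}' prints the full sha256:... ID; short_id trims it to the 12-character form shown by docker images. The helper names are hypothetical.

```python
from __future__ import annotations

import subprocess


def build_image(tag: str, context: str = ".", runner=subprocess.run) -> str:
    """Build the Dockerfile in `context`, tag the result, and return its full ID."""
    runner(["docker", "image", "build", "-t", tag, context], check=True)
    result = runner(
        ["docker", "image", "inspect", "--format", "{{.Id}}", tag],
        capture_output=True, text=True, check=True,
    )
    return result.stdout.strip()


def short_id(image_id: str) -> str:
    """Turn "sha256:<64 hex chars>" into the 12-character ID `docker images` shows."""
    return image_id.split(":", 1)[-1][:12]
```

Because the image is tagged, the run command can equally use blog:latest in place of the ID d41f5d378ae6.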

Once the image is successfully built, let's check for it in our local repository with docker images.

Yeahhh, it is there. Let's run it.

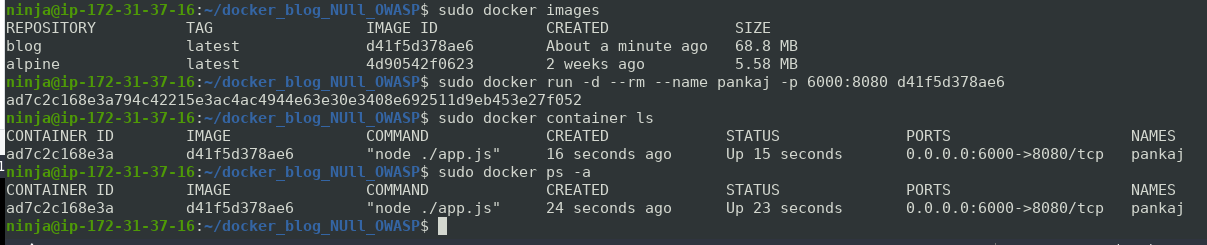

$ docker run -d --rm --name pankaj -p 6000:8080 d41f5d378ae6

We can then list our running containers with docker container ls.

Here

-d : detached mode, i.e. the container runs in the background

--rm : automatically removes the container when it exits (for example, when it is stopped)

--name : gives our container a name (here, pankaj) instead of a randomly generated one

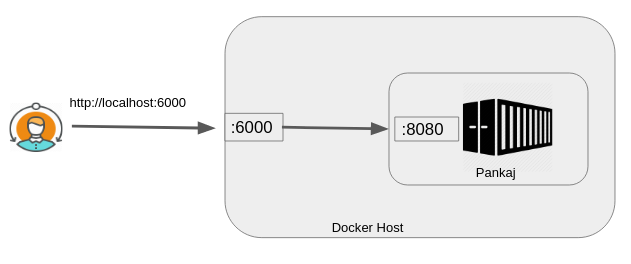

-p : publishes a container port on the host, in the form hostPort:containerPort

The image above shows how the ports defined above work. 8080 is the port exposed by the application inside the container, and 6000 is the host port we bind to 8080 to access the application.
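To keep the flags straight, here is a Python sketch that assembles the same docker run command from named options; the function names are hypothetical. Note the order inside -p: host port first, container port second.

```python
from __future__ import annotations


def port_mapping(host_port: int, container_port: int) -> str:
    """Format a -p value: the host port comes first, the container port second."""
    for port in (host_port, container_port):
        if not 1 <= port <= 65535:
            raise ValueError(f"port out of range: {port}")
    return f"{host_port}:{container_port}"


def docker_run_args(
    image: str,
    *,
    name: str | None = None,
    ports: tuple[tuple[int, int], ...] = (),
    detach: bool = True,
    remove: bool = True,
) -> list[str]:
    """Assemble the argument list for `docker run` with the flags explained above."""
    args = ["docker", "run"]
    if detach:
        args.append("-d")    # run in the background
    if remove:
        args.append("--rm")  # delete the container once it exits
    if name:
        args += ["--name", name]
    for host_port, container_port in ports:
        args += ["-p", port_mapping(host_port, container_port)]
    args.append(image)
    return args
```

The resulting list could be passed straight to subprocess.run(..., check=True), for example docker_run_args("blog:latest", name="pankaj", ports=[(6000, 8080)]).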

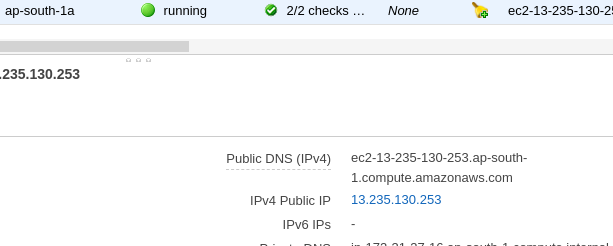

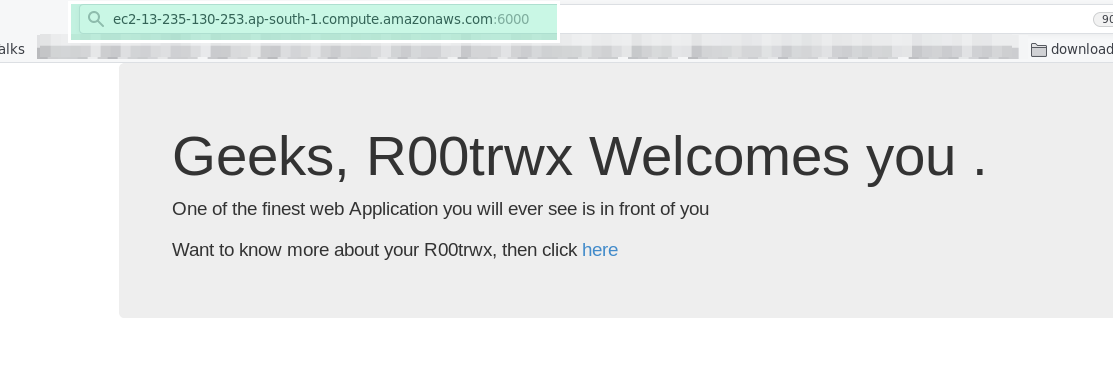

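Now, access the application using your EC2 instance's public DNS (IPv4) name on port 6000. The instance's security group must allow inbound traffic on that port.

This is what our application looks like.

If you are on your local machine, access it using

http://localhost:6000

Note that some browsers, including Chrome and Firefox, refuse to open port 6000 because it is on their list of unsafe ports; if that happens, use curl or publish a different host port (for example -p 8000:8080).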
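Since the container runs detached, the app may not answer the very first request. A minimal sketch that polls a URL such as http://localhost:6000 until an HTTP server responds; the function name and the default timeouts are assumptions.

```python
import http.client
import time
import urllib.error
import urllib.request


def wait_for_http(url: str, timeout: float = 30.0, interval: float = 0.5,
                  request_timeout: float = 5.0) -> int:
    """Poll `url` until an HTTP server answers and return its status code.

    Raises TimeoutError if nothing answers within `timeout` seconds.
    """
    deadline = time.monotonic() + timeout
    while True:
        try:
            with urllib.request.urlopen(url, timeout=request_timeout) as resp:
                return resp.status
        except urllib.error.HTTPError as exc:
            return exc.code  # the app answered, just not with a 2xx status
        except (OSError, http.client.HTTPException):
            # Connection refused or reset, or a garbled reply: not ready yet.
            if time.monotonic() >= deadline:
                raise TimeoutError(f"{url} did not answer within {timeout} s") from None
            time.sleep(interval)
```

For example, wait_for_http("http://localhost:6000") returns 200 once the blog container is serving.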